The thing about these deprecated tools is that the replacements either suck, are too convoluted, don’t give you the same info, or are overly verbose/obtuse.

ifconfig gave you the most relevant information about your network interfaces at a glance, almost like a dashboard: IP, MAC address, link status, TX/RX packet counts and errors, etc. You can get all that with ip, but you’ve got to add a bunch of arguments, make multiple calls with different arguments, and it’s still not quite what ifconfig was.
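For what it’s worth, a rough approximation of the old ifconfig “dashboard” can be stitched together from iproute2’s JSON output. This is a minimal sketch, not a drop-in replacement. It assumes an iproute2 build that supports `-j` (JSON) and emits `stats64` counters for `ip -s link`, and the helper names (`ip_json`, `merge_dashboard`, `format_row`) are made up for illustration. It still takes two separate `ip` calls, which sort of proves the point.

```python
import json
import subprocess


def ip_json(*args):
    """Run an iproute2 command with JSON output (-j) and return the parsed list."""
    out = subprocess.run(["ip", "-j", *args],
                         capture_output=True, text=True, check=True).stdout
    return json.loads(out)


def merge_dashboard(links, addrs):
    """Combine `ip -j -s link` and `ip -j addr` results into one dict per interface.

    Assumes the field names recent iproute2 versions emit: ifname, address,
    operstate, stats64.rx/tx, and addr_info[].local/prefixlen.
    """
    ips = {}
    for entry in addrs:
        ips[entry["ifname"]] = [
            f'{a["local"]}/{a["prefixlen"]}'
            for a in entry.get("addr_info", [])
            if "local" in a  # skip any entry without an address
        ]
    rows = []
    for link in links:
        stats = link.get("stats64", {})
        rx, tx = stats.get("rx", {}), stats.get("tx", {})
        rows.append({
            "ifname": link["ifname"],
            "mac": link.get("address", "-"),
            "state": link.get("operstate", "UNKNOWN"),
            "ips": ips.get(link["ifname"], []),
            "rx_packets": rx.get("packets", 0),
            "rx_errors": rx.get("errors", 0),
            "tx_packets": tx.get("packets", 0),
            "tx_errors": tx.get("errors", 0),
        })
    return rows


def format_row(row):
    """Render one interface as a single ifconfig-ish summary line."""
    ips = ",".join(row["ips"]) or "-"
    return (f'{row["ifname"]:<10} {row["state"]:<8} {row["mac"]:<17} {ips}  '
            f'RX {row["rx_packets"]} pkts/{row["rx_errors"]} err  '
            f'TX {row["tx_packets"]} pkts/{row["tx_errors"]} err')


if __name__ == "__main__":
    # One call for link state and counters, another for addresses.
    links = ip_json("-s", "link", "show")
    addrs = ip_json("addr", "show")
    for row in merge_dashboard(links, addrs):
        print(format_row(row))
```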

Similarly, iwconfig gave you that same “dashboard”-like information for your wireless adapters. I use iw for configuration, but iwconfig was my go-to for viewing useful information about the adapter. Don’t get me started on how much I hate iw’s syntax and verbosity.
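If the main gripe is reading `iw` output, one workaround is to boil `iw dev <iface> link` down to a few iwconfig-style fields. This is a rough sketch, assuming the usual layout of that command’s output (a “Connected to <BSSID> (on <iface>)” header followed by indented `key: value` lines). The exact set of keys varies by driver and iw version, `parse_iw_link` and `WANTED` are hypothetical names, and `wlan0` is just an example interface.

```python
import re
import subprocess

# Fields that roughly match what iwconfig used to show at a glance.
WANTED = ("SSID", "freq", "signal", "tx bitrate", "rx bitrate")


def parse_iw_link(text):
    """Turn `iw dev <iface> link` output into a small dict.

    Assumes a header line like "Connected to <bssid> (on <iface>)" followed by
    indented "key: value" lines; returns {"connected": False} otherwise.
    """
    lines = text.splitlines()
    header = re.match(r"Connected to ([0-9a-fA-F:]{17})", lines[0]) if lines else None
    if not header:
        return {"connected": False}
    info = {"connected": True, "bssid": header.group(1)}
    for line in lines[1:]:
        # Split on the first colon only, so values like "Home:5G" survive intact.
        key, sep, value = line.strip().partition(":")
        if sep and key in WANTED:
            info[key] = value.strip()
    return info


if __name__ == "__main__":
    out = subprocess.run(["iw", "dev", "wlan0", "link"],
                         capture_output=True, text=True, check=True).stdout
    for key, value in parse_iw_link(out).items():
        print(f"{key:>12}: {value}")
```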

They can pry scp out of my cold dead hands.

At least nftables has a syntax-compatible layer (iptables-nft), so the old iptables commands still work.

I do!

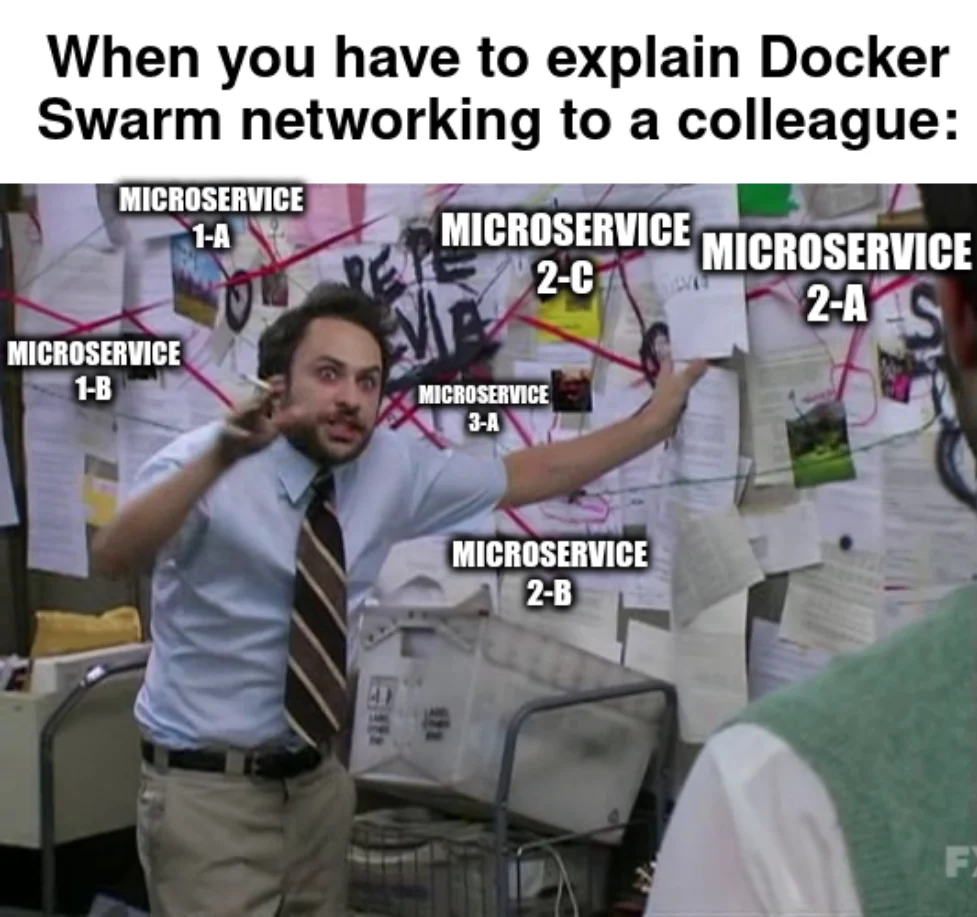

Kubernetes is a nightmare and overkill for most things we need to run, and Docker Swarm is super easy to set up and maintain.

We only use it for one application, though. The app needs to scale horizontally, up and down with demand, so I put together a 6-node Swarm cluster just for it. Works great, though the autoscaling required some helper scripting.
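The helper scripting isn’t shown, but since Swarm has no built-in autoscaler, the general idea can be sketched. This is a minimal example, not the actual script: it borrows a simplified version of the proportional rule the Kubernetes Horizontal Pod Autoscaler documents (desired = ceil(current × observed / target)), clamps it to a min/max, and then calls `docker service scale`. The service name, the 60% CPU target, the replica bounds, and where the average CPU figure comes from (your own monitoring, passed in by hand here) are all assumptions.

```python
import math
import subprocess
import sys


def desired_replicas(current, observed_cpu, target_cpu, min_replicas=2, max_replicas=12):
    """Proportional scaling rule (simplified from the Kubernetes HPA), clamped to bounds.

    current: replicas running now
    observed_cpu: average CPU % across the service's tasks (from your monitoring)
    target_cpu: CPU % you want each task to sit at
    """
    if current <= 0:
        return min_replicas
    wanted = math.ceil(current * observed_cpu / target_cpu)
    return max(min_replicas, min(max_replicas, wanted))


def scale_service(service, replicas):
    """Ask Swarm to converge the service to `replicas` tasks (run on a manager node)."""
    subprocess.run(["docker", "service", "scale", f"{service}={replicas}"], check=True)


if __name__ == "__main__":
    # Hypothetical usage: python autoscale.py myapp_web <current_replicas> <avg_cpu_percent>
    service, current, cpu = sys.argv[1], int(sys.argv[2]), float(sys.argv[3])
    target = desired_replicas(current, cpu, target_cpu=60.0)
    if target != current:
        scale_service(service, target)
```

Run from cron or a systemd timer, something like this would nudge the replica count each interval; a real version would probably want a cooldown or tolerance band so it doesn’t flap between two values.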